Section 5.4 Expected Value

Blaise Pascal was a 17th-century mathematician and philosopher who was accomplished in many areas but is likely best known to you for what is now called Pascal's Triangle. As part of his philosophical pursuits, he proposed what is known as "Pascal's wager". It considers two mutually exclusive outcomes: that God exists or that he does not. His argument is that a rational person should live as though God exists and seek to believe in God. If God does not actually exist, such a person will have only a finite loss (some pleasures, luxury, etc.), whereas they stand to receive infinite gains, represented by eternity in Heaven, and to avoid the infinite loss of eternity in Hell. This type of reasoning is part of what is known as "decision theory".

You may not confront such dire payouts when making your daily decisions, but we still need a formal method for making these determinations precise. The procedure for doing so is what we call expected value.

Given a random variable \(X\) over space \(R\text{,}\) corresponding probability function \(f(x)\) and "value function" \(v(x)\text{,}\) the expected value of \(v(x)\) is given by

\[E[v(X)] = \sum_{x \in R} v(x) f(x)\]

provided \(X\) is discrete, or

\[E[v(X)] = \int_R v(x) f(x)\,dx\]

provided \(X\) is continuous.

Definition 5.4.1. Expected Value.
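The definition translates directly into code. The sketch below is a minimal illustration, not part of the text: the function names `expected_value_discrete` and `expected_value_continuous` are hypothetical, and the continuous case swaps the exact integral for composite Simpson's rule on a bounded interval \([a, b]\), so it only approximates \(E[v(X)]\).

```python
from fractions import Fraction
from typing import Callable, Iterable


def expected_value_discrete(space: Iterable, f: Callable, v: Callable):
    """E[v(X)] = sum over x in R of v(x) * f(x) for a discrete X."""
    return sum(v(x) * f(x) for x in space)


def expected_value_continuous(a: float, b: float, f: Callable, v: Callable,
                              n: int = 1000) -> float:
    """Approximate E[v(X)] = integral of v(x) f(x) over [a, b].

    Uses composite Simpson's rule (a numerical stand-in for the exact
    integral); assumes R = [a, b] is bounded and n is even.
    """
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of subintervals")

    def g(x):
        return v(x) * f(x)

    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        # Odd interior points get weight 4, even interior points weight 2
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


# Discrete: f(x) = x/10 on {1, 2, 3, 4} with v(x) = x, computed exactly
print(expected_value_discrete(range(1, 5), lambda x: Fraction(x, 10), lambda x: x))
```

Passing `Fraction` probabilities keeps the discrete sum exact; for the continuous case, increasing `n` tightens the approximation.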

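Theorem 5.4.2. Expected Value is a Linear Operator.

Let \(c\text{,}\) \(c_1\text{,}\) and \(c_2\) be constants and let \(v\text{,}\) \(v_1\text{,}\) and \(v_2\) be value functions whose expected values exist. Then

1. \(E[c] = c\text{,}\)
2. \(E[c\,v(X)] = c\,E[v(X)]\text{,}\)
3. \(E[c_1 v_1(X) + c_2 v_2(X)] = c_1 E[v_1(X)] + c_2 E[v_2(X)]\text{.}\)

Proof.

Each of these follows by utilizing the corresponding linearity properties of the summation and integration operations. For example, to verify part three in the continuous case:

\[E[c_1 v_1(X) + c_2 v_2(X)] = \int_R \left(c_1 v_1(x) + c_2 v_2(x)\right) f(x)\,dx = c_1 \int_R v_1(x) f(x)\,dx + c_2 \int_R v_2(x) f(x)\,dx = c_1 E[v_1(X)] + c_2 E[v_2(X)].\]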
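As a quick numerical sanity check (not a proof), the sketch below evaluates both sides of the linearity property exactly with Python's `fractions.Fraction`. The distribution \(f(x) = x/10\) on \(\{1, 2, 3, 4\}\) is borrowed from the discrete example in this section, and the constants `c1`, `c2` and value functions `v1`, `v2` are arbitrary illustrative choices.

```python
from fractions import Fraction

# Distribution borrowed from the discrete example: f(x) = x/10 on R = {1, 2, 3, 4}
R = [1, 2, 3, 4]


def f(x):
    return Fraction(x, 10)


def E(v):
    """Exact discrete expected value E[v(X)] = sum of v(x) f(x) over R."""
    return sum(v(x) * f(x) for x in R)


# Arbitrary, illustrative constants and value functions
c1, c2 = Fraction(2), Fraction(-5)


def v1(x):
    return x ** 2


def v2(x):
    return 3 * x - 1


lhs = E(lambda x: c1 * v1(x) + c2 * v2(x))   # E[c1 v1(X) + c2 v2(X)]
rhs = c1 * E(v1) + c2 * E(v2)                # c1 E[v1(X)] + c2 E[v2(X)]
print(lhs, rhs)        # -20 -20
print(E(lambda x: 7))  # E[c] = c: prints 7
```

Agreement on one distribution illustrates the theorem; the general argument is the one given in the proof.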

Checkpoint 5.4.3. WebWork - Expected Value.

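Consider \(f(x) = x/10\) over \(R = \{1, 2, 3, 4\}\text{,}\) where the payout is 10 euros if \(x = 1\text{,}\) 5 euros if \(x = 2\text{,}\) 2 euros if \(x = 3\text{,}\) and \(-7\) euros if \(x = 4\text{.}\) Then your value function would be

\[v(x) = \begin{cases} 10, & x = 1 \\ 5, & x = 2 \\ 2, & x = 3 \\ -7, & x = 4 \end{cases}\]

Computing the expected payout gives

\[E[v(X)] = 10 \cdot \frac{1}{10} + 5 \cdot \frac{2}{10} + 2 \cdot \frac{3}{10} + (-7) \cdot \frac{4}{10} = \frac{10 + 10 + 6 - 28}{10} = -0.20 \text{ euros}.\]

Therefore, the expected payout is actually negative, because the relatively large negative payout is associated with the most likely outcome while the largest positive payout is associated only with the least likely outcome.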

Example 5.4.4. Discrete Expected Value.
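The same computation can be reproduced exactly with Python's `fractions.Fraction`, which avoids floating-point rounding. This is a minimal sketch; the dictionaries `f` and `payout` are illustrative names that simply encode the probability function and value function of Example 5.4.4.

```python
from fractions import Fraction

R = [1, 2, 3, 4]
f = {x: Fraction(x, 10) for x in R}    # probability function f(x) = x/10
payout = {1: 10, 2: 5, 3: 2, 4: -7}    # value function v(x), in euros

assert sum(f.values()) == 1            # f is a valid probability function

expected_payout = sum(payout[x] * f[x] for x in R)
print(expected_payout)  # -1/5
```

The result, \(-1/5\) of a euro, matches the negative expected payout discussed in the example.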

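Consider \(f(x) = x^2/3\) over \(R = [-1, 2]\) with value function given by \(v(x) = e^x - 1\text{.}\) Then, the expected value for \(v(x)\) is given by

\[E[v(X)] = \int_{-1}^{2} (e^x - 1)\frac{x^2}{3}\,dx = \frac{1}{3}\Big[e^x(x^2 - 2x + 2)\Big]_{-1}^{2} - \frac{1}{3}\left[\frac{x^3}{3}\right]_{-1}^{2} = \frac{2e^2 - 5e^{-1}}{3} - 1 \approx 3.31.\]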

Example 5.4.5. Continuous Expected Value.
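The expected value in Example 5.4.5 can be checked numerically. The sketch below is an illustration, not the text's method: it approximates the integral with composite Simpson's rule and compares it to the closed form obtained from the antiderivative \(e^x(x^2 - 2x + 2)\) of \(x^2 e^x\text{.}\) The helper names `f`, `v`, and `simpson` are assumptions made for this example.

```python
import math


def f(x):
    """Probability function f(x) = x^2 / 3 on [-1, 2]."""
    return x * x / 3


def v(x):
    """Value function v(x) = e^x - 1."""
    return math.exp(x) - 1


def simpson(g, a, b, n=2000):
    """Composite Simpson's rule for the integral of g over [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


numeric = simpson(lambda x: v(x) * f(x), -1.0, 2.0)
# Closed form via the antiderivative e^x (x^2 - 2x + 2) of x^2 e^x
exact = (2 * math.exp(2) - 5 * math.exp(-1)) / 3 - 1
print(round(numeric, 4), round(exact, 4))  # 3.3129 3.3129
```

Checking that `simpson(f, -1.0, 2.0)` is 1 also confirms that \(f\) is a valid probability function on \([-1, 2]\text{.}\)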

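Given a random variable \(X\) with probability function \(f(x)\) over space \(R\text{,}\)

1. the mean is \(\mu = E[X]\text{,}\)
2. the variance is \(\sigma^2 = E[(X - \mu)^2]\text{,}\) and the standard deviation is \(\sigma = \sqrt{\sigma^2}\text{,}\)
3. the skewness is \(\gamma_1 = \dfrac{E[(X - \mu)^3]}{\sigma^3}\text{,}\)
4. the kurtosis is \(\gamma_2 = \dfrac{E[(X - \mu)^4]}{\sigma^4}\text{.}\)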

Definition 5.4.6. Theoretical Measures.

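Theorem 5.4.7. Alternate Formulas for Theoretical Measures.

For a random variable \(X\) with mean \(\mu\text{,}\)

1. \(\sigma^2 = E[X^2] - \mu^2\text{,}\)
2. \(E[(X - \mu)^3] = E[X^3] - 3\mu E[X^2] + 2\mu^3\text{,}\)
3. \(E[(X - \mu)^4] = E[X^4] - 4\mu E[X^3] + 6\mu^2 E[X^2] - 3\mu^4\text{.}\)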

Proof.

In each case, expand the binomial inside and use the linearity of expected value.
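The alternate formulas can be checked exactly on a concrete distribution. The sketch below is illustrative rather than the text's method: it computes the central moments \(E[(X - \mu)^k]\) directly from their definitions and again from the raw moments \(E[X^k]\) via the expanded binomials, using the distribution \(f(x) = x/10\) on \(\{1, 2, 3, 4\}\) from Example 5.4.4. The helper names are hypothetical.

```python
from fractions import Fraction


def raw_moment(dist, k):
    """E[X^k] for a discrete distribution stored as {x: f(x)}."""
    return sum(Fraction(x) ** k * p for x, p in dist.items())


def central_moment(dist, k):
    """E[(X - mu)^k] computed directly from the definition."""
    mu = raw_moment(dist, 1)
    return sum((Fraction(x) - mu) ** k * p for x, p in dist.items())


def alternate_central_moments(dist):
    """Variance and third/fourth central moments built only from raw moments E[X^k]."""
    mu = raw_moment(dist, 1)
    m2, m3, m4 = (raw_moment(dist, k) for k in (2, 3, 4))
    var = m2 - mu ** 2
    third = m3 - 3 * mu * m2 + 2 * mu ** 3
    fourth = m4 - 4 * mu * m3 + 6 * mu ** 2 * m2 - 3 * mu ** 4
    return var, third, fourth


# Distribution from Example 5.4.4: f(x) = x/10 on R = {1, 2, 3, 4}
dist = {x: Fraction(x, 10) for x in (1, 2, 3, 4)}
direct = tuple(central_moment(dist, k) for k in (2, 3, 4))
alternate = alternate_central_moments(dist)
print(direct == alternate, *direct)  # True 1 -3/5 11/5
```

Exact fractions make the comparison an equality test rather than a floating-point tolerance check.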

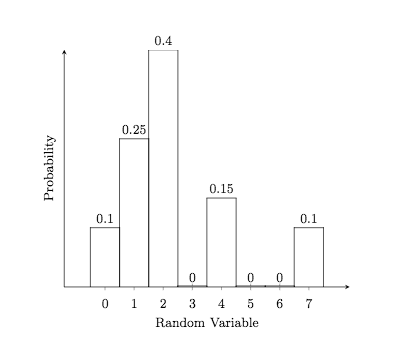

Consider the following example of computing these statistics for a discrete variable. In this case, we will use a variable with a relatively small space so that the summations can easily be done by hand. Indeed, consider

| \(x\) | \(f(x)\) |
|---|---|
| 0 | 0.10 |
| 1 | 0.25 |
| 2 | 0.40 |
| 4 | 0.15 |
| 7 | 0.10 |

Using the definition of the mean as a sum,

\[\mu = \sum_{x \in R} x f(x) = 0(0.10) + 1(0.25) + 2(0.40) + 4(0.15) + 7(0.10) = 2.35.\]

Notice where this lies on the probability histogram for this distribution.

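For the variance, using the alternate formula \(\sigma^2 = E[X^2] - \mu^2\text{,}\)

\[\sigma^2 = \left[0^2(0.10) + 1^2(0.25) + 2^2(0.40) + 4^2(0.15) + 7^2(0.10)\right] - 2.35^2 = 9.15 - 5.5225 = 3.6275\]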

and so the standard deviation \(\sigma = \sqrt{3.6275} \approx 1.90\text{.}\) Notice that 4 times this value encompasses almost all of the range of the distribution.

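For the skewness, first compute

\[E[X^3] = 0^3(0.10) + 1^3(0.25) + 2^3(0.40) + 4^3(0.15) + 7^3(0.10) = 47.35\]

and then use the alternate formula for the numerator:

\[E[(X - \mu)^3] = E[X^3] - 3\mu E[X^2] + 2\mu^3 = 47.35 - 3(2.35)(9.15) + 2(2.35)^3 \approx 8.80,\]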

which yields a skewness of \(\gamma_1 = 8.80 / \sigma^3 \approx 1.27 \text{.}\) This indicates a skew to the right of the mean; the entries at 4 and 7 on the histogram illustrate the distribution trailing off to the right.

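Finally, for the kurtosis, compute

\[E[X^4] = 0^4(0.10) + 1^4(0.25) + 2^4(0.40) + 4^4(0.15) + 7^4(0.10) = 285.15\]

and use the alternate formula for the numerator:

\[E[(X - \mu)^4] = E[X^4] - 4\mu E[X^3] + 6\mu^2 E[X^2] - 3\mu^4 = 285.15 - 4(2.35)(47.35) + 6(2.35)^2(9.15) - 3(2.35)^4 \approx 51.75,\]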

which yields a kurtosis of \(\gamma_2 = 51.75 / \sigma^4 \approx 3.93\text{,}\) suggesting that the distribution is modestly bell-shaped.
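The whole discrete example can be reproduced in a few lines. This sketch (variable names are illustrative) stores the table's probabilities as exact fractions, applies the alternate formulas of Theorem 5.4.7, and converts to floating point only for the square root and the final ratios; it reproduces the variance 3.6275, the skewness numerator 8.80, and the kurtosis numerator 51.75 quoted above.

```python
import math
from fractions import Fraction

# The distribution from the table: value x -> probability f(x), stored exactly
dist = {0: Fraction("0.10"), 1: Fraction("0.25"), 2: Fraction("0.40"),
        4: Fraction("0.15"), 7: Fraction("0.10")}


def E(g):
    """Exact expected value E[g(X)] as a sum over the space."""
    return sum(g(x) * p for x, p in dist.items())


mu = E(lambda x: x)
m2 = E(lambda x: x ** 2)
m3 = E(lambda x: x ** 3)
m4 = E(lambda x: x ** 4)

var = m2 - mu ** 2                                          # sigma^2 = E[X^2] - mu^2
third = m3 - 3 * mu * m2 + 2 * mu ** 3                      # E[(X - mu)^3]
fourth = m4 - 4 * mu * m3 + 6 * mu ** 2 * m2 - 3 * mu ** 4  # E[(X - mu)^4]

sigma = math.sqrt(var)
skewness = float(third) / sigma ** 3
kurtosis = float(fourth) / float(var) ** 2
print(float(mu), float(var), round(sigma, 2), round(skewness, 2), round(kurtosis, 2))
# 2.35 3.6275 1.9 1.27 3.93
```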

Checkpoint 5.4.9. WebWork - Random Variables.

Checkpoint 5.4.10. WebWork - More Random Variables.

Checkpoint 5.4.11. WebWork - Even More.

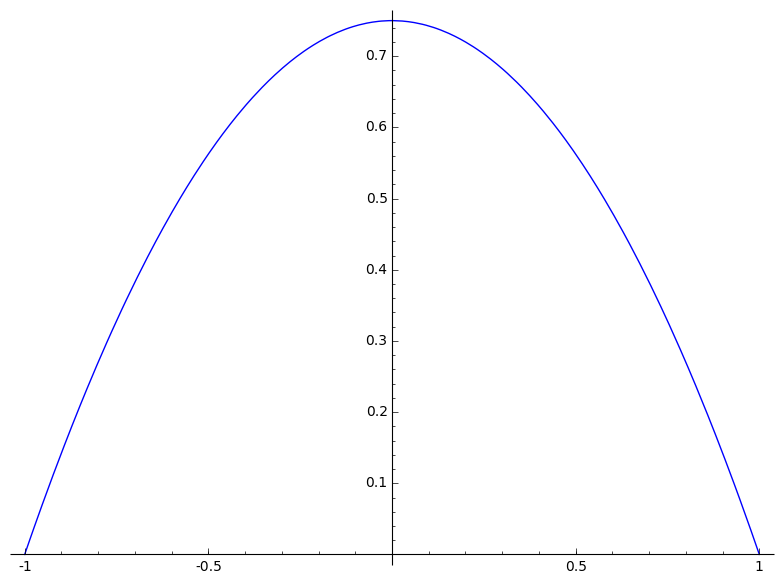

Consider the following example of computing these statistics for a continuous variable. Let \(f(x) = \frac{3}{4} \cdot (1-x^2)\) over \(R = [-1,1]\text{.}\)

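Then for the mean,

\[\mu = \int_{-1}^{1} x \cdot \frac{3}{4}(1 - x^2)\,dx = \frac{3}{4}\left[\frac{x^2}{2} - \frac{x^4}{4}\right]_{-1}^{1} = 0,\]

as expected since the probability function is symmetric about \(x = 0\text{.}\)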

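For the variance, using the alternate formula from Theorem 5.4.7,

\[\sigma^2 = E[X^2] - \mu^2 = \int_{-1}^{1} x^2 \cdot \frac{3}{4}(1 - x^2)\,dx - 0^2 = \frac{3}{4}\left[\frac{x^3}{3} - \frac{x^5}{5}\right]_{-1}^{1} = \frac{3}{4}\left(\frac{2}{3} - \frac{2}{5}\right) = \frac{1}{5},\]

and taking the square root gives a standard deviation of \(\sigma = 1/\sqrt{5} \approx 0.447\text{,}\) slightly less than 1/2. Notice that four times this value encompasses almost all of the range of the distribution.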

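For the skewness, notice that the graph is symmetric about the mean, so we would expect a skewness of 0. Just to check,

\[E[(X - \mu)^3] = E[X^3] - 3\mu E[X^2] + 2\mu^3 = \int_{-1}^{1} x^3 \cdot \frac{3}{4}(1 - x^2)\,dx - 0 + 0 = \frac{3}{4}\left[\frac{x^4}{4} - \frac{x^6}{6}\right]_{-1}^{1} = 0,\]

as expected, without needing to complete the calculation by dividing by the cube of the standard deviation.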

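Finally, note that the probability function in this case is modestly close to a bell-shaped curve, so we would expect a kurtosis in the vicinity of 3. Using the alternate formula and noting that (conveniently) \(\mu = 0\) gives

\[E[(X - \mu)^4] = E[X^4] = \int_{-1}^{1} x^4 \cdot \frac{3}{4}(1 - x^2)\,dx = \frac{3}{4}\left(\frac{2}{5} - \frac{2}{7}\right) = \frac{3}{35},\]

and so dividing by \(\sigma^4 = \left(\sqrt{1/5}\right)^4 = \frac{1}{25}\) gives a kurtosis of

\[\gamma_2 = \frac{3/35}{1/25} = \frac{75}{35} = \frac{15}{7} \approx 2.14.\]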

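Consider our previous Example 5.4.5, with \(f(x) = x^2/3\) over \(R = [-1, 2]\text{.}\) To compute the mean and variance (and hence the standard deviation) for this distribution,

\[\mu = \int_{-1}^{2} x \cdot \frac{x^2}{3}\,dx = \left[\frac{x^4}{12}\right]_{-1}^{2} = \frac{5}{4},\]

and by using the alternate formulas of Theorem 5.4.7,

\[\sigma^2 = E[X^2] - \mu^2 = \int_{-1}^{2} \frac{x^4}{3}\,dx - \frac{25}{16} = \frac{11}{5} - \frac{25}{16} = \frac{51}{80},\]

which gives \(\sigma = \sqrt{51/80} \approx 0.80\text{.}\) For skewness, note that in computing the variance above you also found that \(E[X^2] = \frac{11}{5}\text{.}\) So, once again by using the alternate formulas of Theorem 5.4.7,

\[E[X^3] = \int_{-1}^{2} \frac{x^5}{3}\,dx = \frac{7}{2}, \qquad E[(X - \mu)^3] = \frac{7}{2} - 3 \cdot \frac{5}{4} \cdot \frac{11}{5} + 2\left(\frac{5}{4}\right)^3 = -\frac{27}{32},\]

and so

\[\gamma_1 = \frac{-27/32}{(51/80)^{3/2}} \approx -1.66.\]

For kurtosis, you can reuse \(E[X^3] = \frac{7}{2}\) and \(E[X^2] = \frac{11}{5}\) together with \(E[X^4] = \int_{-1}^{2} \frac{x^6}{3}\,dx = \frac{43}{7}\) and the alternate formulas of Theorem 5.4.7 to determine

\[E[(X - \mu)^4] = \frac{43}{7} - 4 \cdot \frac{5}{4} \cdot \frac{7}{2} + 6\left(\frac{5}{4}\right)^2 \frac{11}{5} - 3\left(\frac{5}{4}\right)^4 = \frac{3483}{1792} \approx 1.94,\]

which is the numerator for the kurtosis.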
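The moments for Example 5.4.5 can be checked exactly with the same term-by-term integration idea. This is a sketch with hypothetical helper names: \(f(x) = x^2/3\) is stored as polynomial coefficients, every moment is integrated exactly with `fractions.Fraction`, and floating point is used only for the final skewness.

```python
import math
from fractions import Fraction


def raw_moment(coeffs, a, b, k):
    """E[X^k] for the polynomial density f(x) = sum(coeffs[j] * x**j) on [a, b]."""
    total = Fraction(0)
    for j, c in enumerate(coeffs):
        n = j + k + 1                      # x^(j+k) integrates to x^n / n
        total += Fraction(c) * (Fraction(b) ** n - Fraction(a) ** n) / n
    return total


def central_numerators(coeffs, a, b):
    """Mean, variance, and the skewness/kurtosis numerators via the alternate formulas."""
    mu, m2, m3, m4 = (raw_moment(coeffs, a, b, k) for k in (1, 2, 3, 4))
    var = m2 - mu ** 2
    third = m3 - 3 * mu * m2 + 2 * mu ** 3
    fourth = m4 - 4 * mu * m3 + 6 * mu ** 2 * m2 - 3 * mu ** 4
    return mu, var, third, fourth


# Example 5.4.5: f(x) = x^2 / 3 on [-1, 2]  ->  coefficients of 1, x, x^2
coeffs = [0, 0, Fraction(1, 3)]
mu, var, third, fourth = central_numerators(coeffs, -1, 2)
skewness = float(third) / math.sqrt(var) ** 3
kurtosis = fourth / var ** 2
print(mu, var, third, fourth)                         # 5/4 51/80 -27/32 3483/1792
print(round(skewness, 2), round(float(kurtosis), 2))  # -1.66 4.78
```

The negative skewness reflects the long left tail: most of the probability sits near \(x = 2\text{,}\) with a thin portion stretching back to \(x = -1\text{.}\)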

Example 5.4.12.

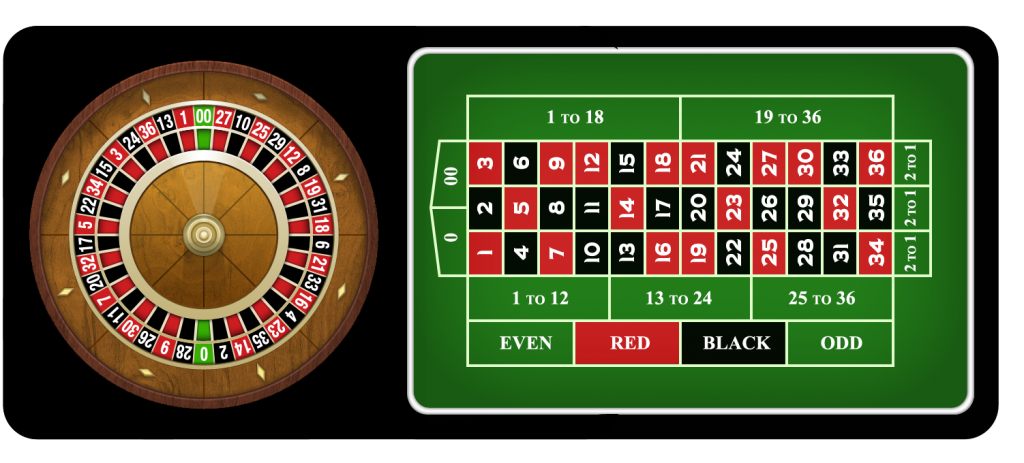

Roulette is a gambling game popular in many casinos in which a player attempts to win money from the casino by predicting where a ball will land on a spinning wheel. There are two variations of this game, the American version and the European version; the American version has one additional numbered slot on the wheel. The American version of the game will be used for the purposes of this example. A Roulette wheel consists of 38 equally sized sectors identified with the numbers 1 through 36 plus 0 and 00. The 0 and 00 sectors are colored green, and half of the remaining numbers are in sectors colored red with the remainder colored black. A steel ball is dropped onto the spinning wheel, and when the wheel comes to rest, the sector in which the ball has come to rest is noted. Since the sectors are equally sized, the probability of landing on any one of the 38 sectors is 1/38. A picture of a typical American-style wheel and betting board is shown above.

Example 5.4.13. Roulette.

Since this is a game in a casino, there must be the opportunity to bet (and likely lose) money. For the remainder of this example we will assume that you are betting 1 dollar each time; if you were to bet more, the values would scale correspondingly. If you place your bet on any single number and the ball ends up on the sector corresponding to that number, you win a net of 35 dollars. If the ball lands elsewhere, you lose your dollar. Therefore the expected value of winning if you bet on one number is

\[35 \cdot \frac{1}{38} + (-1) \cdot \frac{37}{38} = -\frac{2}{38} = -\frac{1}{19} \approx -0.0526,\]

which is a little more than a nickel loss on average.

You can bet on two numbers as well, and if the ball lands on either of the two then you win a net payout of 17 dollars. Therefore the expected value of winning if you bet on two numbers is

\[17 \cdot \frac{2}{38} + (-1) \cdot \frac{36}{38} = -\frac{2}{38} = -\frac{1}{19}.\]

Continuing, you can bet on three numbers, and if the ball lands on any of the three then you win a net payout of 11 dollars. Therefore the expected value of winning if you bet on three numbers is

\[11 \cdot \frac{3}{38} + (-1) \cdot \frac{35}{38} = -\frac{2}{38} = -\frac{1}{19}.\]

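You can bet on all reds, all blacks, all evens (ignoring 0 and 00), or all odds and win a net of 1 dollar (your dollar is returned along with one more). The expected value for any of these options is

\[1 \cdot \frac{18}{38} + (-1) \cdot \frac{20}{38} = -\frac{2}{38} = -\frac{1}{19}.\]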

There is one special way to bet that uses the 5 numbers {0, 00, 1, 2, 3} and pays a net of 6 dollars. This is called the "top line" or "basket" bet. Notice that with five numbers, matching the expected value of the other bets would require a net payout of \(31/5 = 6.2\) dollars per dollar bet, which is not a whole-number payout. The expected value of winning in this case is

\[6 \cdot \frac{5}{38} + (-1) \cdot \frac{33}{38} = -\frac{3}{38} \approx -0.0789,\]

which is worse than the other bets; it is the only standard way to bet on roulette that has a different expected value.
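Every bet in this example has the same structure: with \(k\) numbers covered, you win the net payout with probability \(k/38\) and otherwise lose the stake. The sketch below (function names are hypothetical) computes each expected value exactly. It also uses the observation that a bet covering \(k\) numbers has expected value exactly \(-1/19\) when its net payout is \((36 - k)/k\text{,}\) which gives 35, 17, 11, and 1 for the other bets but \(31/5\) for the five-number bet.

```python
from fractions import Fraction

SECTORS = 38  # American wheel: 1-36 plus 0 and 00


def bet_expected_value(numbers_covered, net_payout, stake=1):
    """Win net_payout * stake with probability k/38; otherwise lose the stake."""
    p_win = Fraction(numbers_covered, SECTORS)
    return net_payout * stake * p_win - stake * (1 - p_win)


def payout_for_house_edge(numbers_covered):
    """Net payout per dollar that makes the expected value exactly -1/19."""
    return Fraction(36 - numbers_covered, numbers_covered)


bets = {
    "single number": (1, 35),
    "two numbers": (2, 17),
    "three numbers": (3, 11),
    "red, black, even, or odd": (18, 1),
    "five numbers {0, 00, 1, 2, 3}": (5, 6),
}
for name, (k, payout) in bets.items():
    ev = bet_expected_value(k, payout)
    print(f"{name}: {ev} (about {float(ev):.4f}); "
          f"payout matching -1/19 would be {payout_for_house_edge(k)}")
```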

There are other possible ways to bet on roulette, but none provides a better expected value of winning. The moral of this story is that you should never bet on the five-number option, and if you ever get ahead by winning at roulette using any of the possible options, you should probably stop quickly, since over a long period of time you can expect to lose an average of \(\frac{1}{19}\) dollars per game.
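The long-run claim can be illustrated with a small Monte Carlo simulation. This is a sketch under simplifying assumptions: sectors are labeled 0 through 37 with 18 of them treated as red (which physical numbers are red does not affect the probability), the bet is the even-money red bet, and `simulate_red_bets` is a hypothetical helper. With a fixed seed the run is reproducible, and the average should land near \(-1/19 \approx -0.0526\text{.}\)

```python
import random


def simulate_red_bets(n_spins, seed=0):
    """Average net winnings per $1 bet on red over n_spins simulated spins.

    Simplified model: sectors 0..37 are equally likely and sectors 0..17
    stand in for the 18 red numbers.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_spins):
        sector = rng.randrange(38)          # each of the 38 sectors equally likely
        total += 1 if sector < 18 else -1   # win $1 net on red, otherwise lose $1
    return total / n_spins


print(simulate_red_bets(200_000), -1 / 19)
```

Over many spins the sample average settles near the expected value, which is exactly why early winnings tend not to last.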

Going back to Pascal's wager, let

- X = 0 represent disbelief when God doesn't exist

- X = 1 represent disbelief when God does exist

- X = 2 represent belief when God does exist

- X = 3 represent belief when God does not exist

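Presume that \(p\) is the probability that God exists. Then you can compute the expected value of disbelief and the expected value of belief by first creating a value function. Below, for argument's sake, we somewhat arbitrarily assign a value of one million to disbelief if God doesn't exist, and we write the finite loss from belief if God doesn't exist as \(-c\) for some finite \(c \ge 0\text{.}\) The conclusions are the same if you choose any other finite numbers.

\[v(x) = \begin{cases} 1{,}000{,}000, & x = 0 \\ -\infty, & x = 1 \\ \infty, & x = 2 \\ -c, & x = 3 \end{cases}\]

Then, since disbelief results in \(X = 0\) with probability \(1 - p\) and in \(X = 1\) with probability \(p\text{,}\) the expected value of disbelief is

\[1{,}000{,}000\,(1 - p) + (-\infty)\,p = -\infty\]

if \(p > 0\text{.}\) On the other hand, belief results in \(X = 2\) with probability \(p\) and in \(X = 3\) with probability \(1 - p\text{,}\) so the expected value of belief is

\[\infty \cdot p + (-c)(1 - p) = \infty\]

if \(p > 0\text{.}\) So Pascal's conclusion is that if there is even the slightest chance that God exists, then belief is the smart and scientific choice.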
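Python's floating-point infinities can mirror this argument. The sketch below is illustrative only: `math.inf` stands in for the infinite payoffs, the finite gain is the one million used in the text, and `finite_loss` is a hypothetical parameter for the finite loss from belief if God doesn't exist. The guard on `p` matters because `inf * 0` is `nan` in floating point, which is one way to see why the argument is stated for \(p > 0\text{.}\)

```python
import math


def pascal_expected_values(p, finite_gain=1_000_000, finite_loss=1_000_000):
    """Expected values of (disbelief, belief) when God exists with probability p.

    finite_gain: value of disbelief if God doesn't exist (one million in the text).
    finite_loss: hypothetical size of the finite loss from belief if God doesn't exist.
    """
    if not 0 < p <= 1:
        raise ValueError("the argument assumes 0 < p <= 1")
    disbelief = finite_gain * (1 - p) + (-math.inf) * p
    belief = math.inf * p + (-finite_loss) * (1 - p)
    return disbelief, belief


for p in (1e-9, 0.01, 0.5):
    print(p, pascal_expected_values(p))
```

Changing `finite_gain` or `finite_loss` to any other finite value leaves both results unchanged, matching the remark that the conclusions do not depend on the particular finite numbers chosen.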